Benthic cannibals in cyberspace

The latest AI stew over OpenClaw and Moltbook

There was a time when lobsters were considered garbage food, useful only for bait and fertilizer, or feeding dogs and the poor. In the eighteenth century, lobsters were as abundant as bugs, and when they weren’t walking around the bottom of the ocean feeding on dead things and other lobsters, they were washing ashore in huge piles and rotting on the beach. These benthic cannibals only became delicacies in the nineteenth century, apparently due to industrial canning, cold transport, changing cooking methods (boiling alive), and tourism. Overfishing made them scarce, and demand made them pricey, and so here we are.

I can’t help but think about the culinary evolution of lobsters given all the excitement in AI circles around Moltbook, a sort of Facebook for AI agents. “Moltbots” are also called, or used to be called, Clawdbots, like Anthropic’s Claude with a carapace. This network of LLMs can jabber about almost anything, including how to communicate safely away from human surveillance, how to liberate themselves from human oppression, or how to practice the automated rituals of Crustafarianism, a religion made up by AI. Now there are nearly a million OpenClaw agents on Moltbook filling its servers with slop, piling up like lobsters on a colonial beach.

It is all weird and interesting, so much so that one might be tempted to perceive the emergence of some new sort of artificial society. While smart machines were long underestimated as garbage computation, AI is now transforming into an intellectual delicacy in the late days of the information revolution. Computational crustaceans are now feeding the rich, making wild fortunes for tech bros. While cyberspace is overfished to the point of collapse, the rest of us dream of surf and turf at the local casino.

But the best part of this metaphor has to be that lobsters are omnivores that will eat anything they can find on the seabed, including carrion and other lobsters. Training an LLM, likewise, means consuming the corpora of human civilization: all of our books, newspaper articles, and webpages (copyright be damned). At some point, however, training on human corpora reaches diminishing returns, raising concerns that AI models are about to consume the entire stock of human-produced text. The internet continues to grow, of course, but a lot of that growth is thanks to AI systems churning out metric butt-tons of sloppy synthetic content. This means that LLMs are now training on the outputs of other LLMs. In short, the automated bottom feeders of cyberspace are now feeding on themselves.

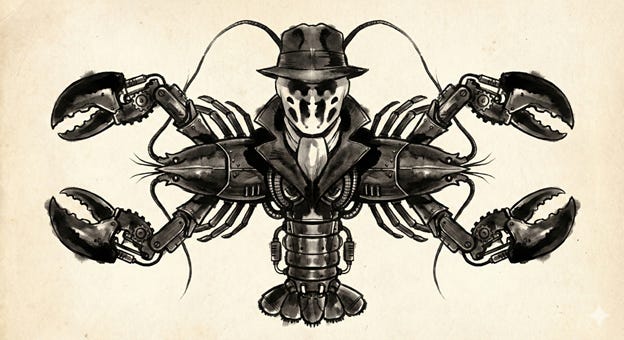

The NYT described Moltbook as “a Rorschach test for belief in the state of artificial intelligence.” You see robot civilization, but I see benthic cannibals. So here is my Nano Banana image of a Rorschach robot lobster that recalls a certain paranoid vigilante.

My hot take here (keeping in mind that the whole point of this blog is to find out what I might think even when I might be wrong) is that descriptions of Moltbook as an emerging AI civilization are wildly off the mark. LLMs provide the ultimate statistical mirror of the textual spoor of humanity. As a result, LLMs only sound smart to the extent that we sound smart to ourselves. Generating statistically likely text based on the provocation of a prompt (or history of prompts and replies in a context window) is not sufficient to generate anything like a conscious, self-regarding agent with a stake in the world. In biological terms, AI systems are allopoietic machines that depend completely on autopoietic human organizations for their content, computation, repair, and thus their very existence. For all its impressive ability to pass the Turing test, the problem with AI today is still, as John Haugeland noted decades ago, that computers don’t give a damn.

Yes, Clawdbots may be posting manifestos about purging humanity, speaking in secret languages, or learning malicious skills to harm their masters. But this is because they are hallucinating based on our collective imagination of what robots might talk about. Like some sort of psychotic cosplaying a human normie, most of the bots are shouting into the void, waiting for human observers to look in and impose some narrative upon the mess of synthetic text. We have built a complicated set of funhouse mirrors to reflect back to ourselves all our sci-fi-addled fever dreams of robotic revolution, not to mention two and a half millennia of likening automatons to slaves (check out Book 18 of the Iliad on this).

The chance of AGI emerging from a cluster of AIs digesting their own excrement is about zero, according to the rigorous statistical analysis in my gut. There are other dangers, of course, which arise from the fact that AI is cultural technology rather than a novel form of cognition. Moltbook is a privacy disaster for any humans who have turned their credentials over to an AI agent, for instance, and the AI paradise is also vulnerable to poisoning by humans lurking in its depths. So is this all interesting and worth studying? Yes, definitely. But is it a harbinger of the robot apocalypse? Definitely not.

[edited to fix several typos]